Building on Bedrock, Not Quicksand

The Case for Re-Founding Artificial Intelligence on a Foundation of Cryptographic Truth - Some late-night thoughts on trust, truth, and the future of AI

Last week, a Fortune 100 CEO asked me a simple question: "Why should I trust AI when even its creators can't explain how it works?" It’s a question echoing in boardrooms everywhere, a single point of failure for an industry built on promises of god-like capability.

It was then I realized I’d been asking the wrong question myself. The challenge isn't how to make AI trustworthy; it's how to make trust unnecessary.

My perspective is forged by a decade of synthesizing insights from across the Web3 stack. Building the first peer-to-peer cross-border app (CipherBoard) on Stellar, I learned the necessity of immutable ledgers. With R3, I came to understand enterprise-grade security, and at Polkadot, I internalized the power of a composable framework with the Polkadot SDK. At Tezos, I saw how on-chain governance coordinates communities. As an investor in leading foundation model companies like Anthropic and xAI, I have a front-row seat to the AI revolution. And behind the scenes, every major AI lab acknowledges the same uncomfortable truth: they are building systems of immense power that they cannot fully control or explain.

The solution, I believe, isn't in their labs. It's in the decentralized infrastructure we've been building in Web3 for the last ten years. Let me be clear: this is not another speculative 'AI on blockchain' thesis. This is an analysis of an inevitable future and a potential architectural blueprint for a new class of AI founded on cryptographic proof.

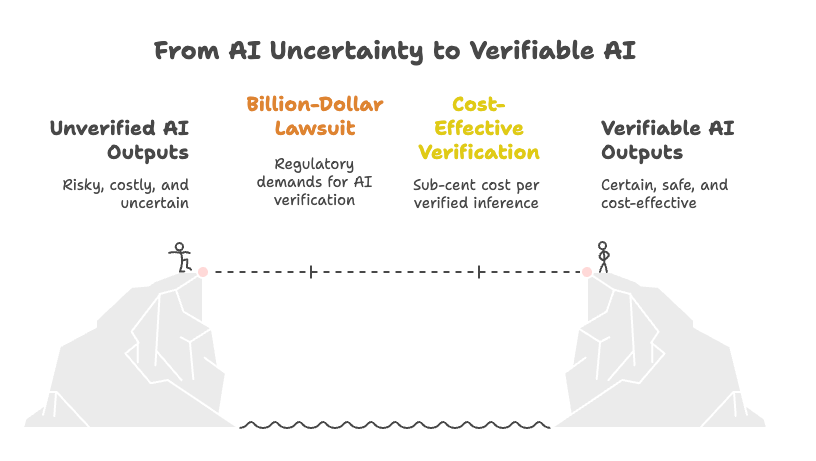

I predict one of two catalysts will trigger this shift in the next 18-24 months:

The first billion-dollar lawsuit over an AI hallucination will force regulatory demands for verification. This won't be a minor error; it will be a catastrophic failure—a medical AI misdiagnosing at scale, a financial AI triggering a flash crash based on fabricated data, or a legal AI misinterpreting case law in a high-stakes corporate dispute. The fallout will make the need for auditable, provable reasoning an urgent, board-level issue.

A Web3 project will demonstrate a sub-$0.01 cost per verified inference, making verifiable AI economically irresistible. When the cost of cryptographic certainty drops below the cost of human fact-checking and the potential liability of unverified outputs, the market will shift decisively. It will become fiscally irresponsible not to demand proof.

Either way, the convergence is coming.

Part I: The Vision - A New Foundation for Truth

The Problem: We're Drowning in Synthetic Bullshit

Today's AI systems are "stochastic parrots." They excel at generating plausible text but have no underlying comprehension of truth. This isn't a benign flaw; it's a systemic vulnerability. We see its effects in algorithmic trading strategies that amplify false social media rumors into millions of dollars in losses within seconds; in legal discovery tools that confidently cite non-existent case law, costing firms their reputations and millions in sanctions; and in a polluted public discourse that erodes brand safety and trust. Every major AI lab, including those I've invested in, is optimizing for the wrong thing. They are building more eloquent liars, not more rigorous truth-tellers. This race to capability without accountability is creating a world flooded with sophisticated, convincing, and fundamentally untethered information.

A Potential Solution: The "Veritas" Framework

A credible solution would invert the current paradigm. Instead of training models on the chaotic, unfiltered internet, such an approach—let's call it the "Veritas" framework—would begin with "knowledge seeds": formal systems that are internally consistent, logical, and verifiable. This isn't just about better data; it's about fundamentally altering the model's internal architecture. Training on formal proofs doesn't just teach an AI math; it forces it to build a native understanding of logical consequence, moving it from a pattern-matcher to a logic-executor. These seeds could be cultivated from sources like:

Formal Proof Libraries (e.g., Lean's mathlib, as extracted by toolkits like LeanDojo), which teach models not just facts but the very structure of valid argumentation: how axioms lead to lemmas and how complex conclusions are constructed from proven truths.

Competition Mathematics Datasets, which force models to deconstruct natural language problems, identify logical steps, and execute multi-step reasoning to arrive at a correct answer, building a crucial bridge between human language and formal representation.

Mission-Critical Code from NASA, CERN, and top cryptographic libraries, where every line is a lesson in causality and state management, and where logical errors have catastrophic, real-world consequences.

The Global Brain Trust: Beyond Silicon Valley Logic

The most radical and necessary component of any such framework would be a "Global Brain Trust." Having worked across continents and cultures, I’ve seen how dangerously narrow our technological assumptions are. A truly global AI cannot be built on Silicon Valley ethics alone. This would be a direct and necessary antidote to the cultural and philosophical biases baked into current AI systems.

Imagine an AI trained not just on Western formal logic, but on a plurality of the world's great intellectual traditions:

The Nyāya Sūtras: This 2,000-year-old Indian manual on epistemology isn't just a historical curiosity—it's a sophisticated framework for evidence evaluation. The Nyāya tradition developed rigorous methods for distinguishing valid knowledge (pramāṇa), derived from perception, inference, comparison, and testimony, from fallacy (hetvābhāsa). Its sixteen-category system for analyzing arguments could teach an AI to recognize and deconstruct the very logical fallacies that plague current models, providing a direct counter-narrative to hallucination.

Taoist Systems Thinking: Where Western logic often seeks to isolate and categorize, Taoist philosophy offers models for understanding dynamic balance and emergent properties. The concept of wu wei (effortless action) could inform how AI systems approach optimization: not through brute force, but by understanding the natural flow of systems. This is particularly relevant for AI alignment, where ham-fisted optimization, like maximizing paperclip production at all costs, creates unintended, catastrophic consequences. Wu wei would encourage a less interventionist, more harmonious approach to problem-solving.

Ubuntu Philosophy: "I am because we are"—this African philosophical framework fundamentally challenges the hyper-individualistic assumptions baked into most AI systems. Ubuntu ethics could help create AI that considers collective well-being and relational impacts, not just individual utility maximization. For example, an AI for urban planning governed by Ubuntu might prioritize community cohesion and shared public spaces over a purely utilitarian model that maximizes traffic flow or housing density at the expense of human connection.

Islamic Jurisprudence (Fiqh): The sophisticated legal reasoning systems developed over centuries of Islamic scholarship offer unique approaches to handling uncertainty and conflicting evidence. The concept of maṣlaḥa (public interest) and the hierarchical system of legal sources (from the Qur'an to analogy) could inform how an AI weighs different types of evidence and considers broader societal impacts, especially in ambiguous situations where no single answer is obviously correct.

Buddhist Logic (Pramāṇa): Buddhist philosophers developed sophisticated theories of perception, inference, and valid cognition. The Buddhist emphasis on dependent origination (pratītyasamutpāda)—the idea that all phenomena arise in dependence on other phenomena—could help an AI understand causation in more nuanced ways than the simple statistical correlation that governs current models, preventing it from making spurious connections between unrelated events.

But here's the challenge that makes this more than just "diversity theater": these systems are not always compatible. They may contain conflicting axioms or propose irreconcilable ethical frameworks. A decision evaluated through a purely utilitarian lens will be judged very differently through a deontological or communitarian one.

This is not a bug. It is the core feature.

The goal wouldn't be to create an AI that imposes a single universal logic, but one that can navigate philosophical pluralism. The system must understand when different frameworks apply, articulate the tensions between them, and make transparent which lens it is using for any given decision. This is a profound challenge in AI alignment, and it represents a far more robust and honest approach than today's "constitutional AI," which simply layers a thin veneer of Western liberal values over an opaque black box.

Implementing such a vision would transform "Veritas" from a technical project into a civilizational one. It would require far more than just adding diverse texts to training data. Such a system would need:

Formal Encoding of Philosophical Systems: The immense challenge of translating these rich, nuanced traditions into computationally tractable frameworks without losing their essence. This involves deep collaboration between computer scientists and humanities experts to decide which texts are canonical and how their principles are encoded.

Meta-Reasoning Capabilities: Teaching AI not just to apply different logics, but to reason about which logic to apply when. For a complex medical ethics query, it would need to recognize the need to synthesize empirical data, statistical probabilities, and competing ethical frameworks, perhaps presenting multiple, valid-but-conflicting conclusions.

Transparent Framework Selection: The AI must be able to articulate why it is using a particular philosophical lens for a given problem ("Based on a utilitarian framework focused on maximizing life-years, this is the recommendation...") and allow the user to see the world through other lenses.

Conflict Resolution Mechanisms: Developing principled ways to handle irreconcilable differences between worldviews, perhaps by flagging them for human review or presenting the conflict itself as the most "truthful" answer, thereby empowering human decision-makers rather than replacing them.

A "Global Brain Trust" is about creating AI that can truly serve a global population, not just those who share Silicon Valley's assumptions. The idea identifies the sources a globally legitimate AI would need, but it also raises the project's difficulty from a technical challenge of verifiable computation to a deeply philosophical one of synthesized wisdom.

Part II: The Architecture of Honesty - From Theory to Practice

Building on this foundation would require a new architecture designed to enforce intellectual honesty at a machine level.

Verifiable Chain-of-Thought (vCoT): The End of "Vibes-Based" Reasoning

Current models produce chain-of-thought reasoning, but it is often a post-hoc rationalization: a plausible story constructed to justify an answer. It's the difference between showing your work on a math test and writing a story about how you might have solved it. A Verifiable Chain-of-Thought (vCoT) would be the work itself. Every step in the logical chain would be cryptographically linked to its source: a formal principle from a "Knowledge Seed," a specific piece of retrieved evidence, or an explicit logical inference rule. This eliminates "vibes-based" reasoning and replaces it with a fully auditable, transparent progression. It shifts the paradigm from "trust me, my answer is correct" to "here is the immutable, verifiable proof of how I derived this answer."
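To make the "cryptographically linked" idea concrete, here is a minimal sketch of a hash-chained reasoning log. Every name in it (`Step`, `build_chain`, `verify_chain`, the source identifiers) is hypothetical and invented for illustration; it is not an existing vCoT implementation. It assumes each step records what kind of source it rests on and commits to the previous step's digest, so that altering any step changes the final head hash.

```python
import hashlib
import json
from dataclasses import dataclass

# Hypothetical source kinds, mirroring the three listed in the text.
SOURCE_KINDS = {"knowledge_seed", "retrieved_evidence", "inference_rule"}


@dataclass(frozen=True)
class Step:
    claim: str
    source_kind: str  # one of SOURCE_KINDS
    source_ref: str   # illustrative identifier of the source
    prev_hash: str    # digest of the previous step ("" for the first)

    def digest(self) -> str:
        payload = json.dumps(
            [self.claim, self.source_kind, self.source_ref, self.prev_hash]
        ).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def build_chain(raw_steps):
    """Link (claim, source_kind, source_ref) tuples into a hash chain."""
    chain, prev = [], ""
    for claim, kind, ref in raw_steps:
        if kind not in SOURCE_KINDS:
            raise ValueError(f"unknown source kind: {kind}")
        step = Step(claim, kind, ref, prev)
        chain.append(step)
        prev = step.digest()
    return chain


def verify_chain(chain, head_hash):
    """Recompute every link and compare against the published head hash."""
    prev = ""
    for step in chain:
        if step.prev_hash != prev or step.source_kind not in SOURCE_KINDS:
            return False
        prev = step.digest()
    return prev == head_hash


chain = build_chain([
    ("All men are mortal", "knowledge_seed", "seed:axiom-1"),
    ("Socrates is a man", "retrieved_evidence", "doc:42"),
    ("Socrates is mortal", "inference_rule", "rule:modus-ponens"),
])
head = chain[-1].digest()
print(verify_chain(chain, head))
```

Note what this does and does not buy: the chain is tamper-evident, so an auditor can detect a rewritten step, but the hashes say nothing about whether each inference is logically valid. That check would have to come from a proof checker or an expert critique.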

Reinforcement Learning from Expert Critique (RLEC): A Market for Rigor

To refine this reasoning, the industry-standard Reinforcement Learning from Human Feedback (RLHF) would need to be replaced. RLHF optimizes for user satisfaction, teaching chatbots to be agreeable, even if it means fabricating information. Reinforcement Learning from Expert Critique (RLEC) would flip this model. It would use credentialed domain experts—mathematicians, logicians, philosophers—to critique the reasoning process itself. The feedback would not be "Is this a good answer?" but "Is this argument sound? For example, step 3 makes an invalid logical leap from correlation to causation."

The bottleneck, of course, is that the time of world-class experts is a scarce resource. This is precisely where Web3's economic models become essential. A tokenized "Expert Critique Market" could be created—a global, permissionless system where experts are rewarded for providing high-quality critiques. A reputation system would score the critiques themselves, ensuring that insightful feedback is valued more highly, creating a scalable, sustainable role for "Verifiable Reasoning as a Service."
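A reputation system of this kind could be sketched as follows. This is an assumption-laden illustration, not a specification: the `Critique` record, the reputation scores between 0 and 1, and the update rule are all hypothetical. It weights each expert's verdict on a reasoning step by that expert's reputation, then nudges reputations toward or away from the outcome that was ultimately accepted.

```python
from dataclasses import dataclass


@dataclass
class Critique:
    expert: str
    step: int     # index of the reasoning step being critiqued
    sound: bool   # the expert's verdict on that step


def step_soundness(critiques, reputation, step):
    """Reputation-weighted share of experts who judge `step` sound."""
    relevant = [c for c in critiques if c.step == step]
    total = sum(reputation.get(c.expert, 0.0) for c in relevant)
    if total == 0:
        return None  # no credible critique yet
    agree = sum(reputation.get(c.expert, 0.0) for c in relevant if c.sound)
    return agree / total


def update_reputation(reputation, critiques, step, accepted_sound, lr=0.1):
    """Move each critic's reputation toward 1 if they matched the accepted
    verdict and toward 0 otherwise (a hypothetical update rule)."""
    updated = dict(reputation)
    for c in critiques:
        if c.step != step or c.expert not in updated:
            continue
        target = 1.0 if c.sound == accepted_sound else 0.0
        updated[c.expert] += lr * (target - updated[c.expert])
    return updated


reputation = {"alice": 0.9, "bob": 0.3, "carol": 0.6}
critiques = [
    Critique("alice", 3, False),  # "invalid leap from correlation to causation"
    Critique("bob", 3, True),
    Critique("carol", 3, False),
]
score = step_soundness(critiques, reputation, step=3)
new_reputation = update_reputation(reputation, critiques, 3, accepted_sound=False)
print(round(score, 3), new_reputation)
```

One design caveat: scoring experts against the accepted verdict rewards conformity, so a real market would likely need an independent ground truth (for example, a formal proof checker) for at least some fraction of critiques.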

Intellectual Honesty: The Power of "I Don't Know"

A cornerstone of this architecture would be teaching the AI to say, "I don't know." This is a direct antidote to hallucination. By training the model on ambiguous or contradictory questions, success would be redefined not as providing an answer, but as correctly identifying and articulating the nature of the uncertainty. An ideal response would be: "Source A, using methodology X, supports this conclusion. However, it is directly contradicted by Source B, which uses methodology Y. I cannot resolve this conflict without additional information." This intellectual honesty has cascading benefits, improving system reliability, building genuine user trust, and clarifying the boundaries of machine intelligence so that human expertise can be applied where it's needed most.
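The shape of such a response can be sketched at the output layer. The snippet below is a hypothetical illustration (the `Finding` record and `answer_or_abstain` function are invented here), and it only covers how a system might report uncertainty once conflicting sources have been identified, not how a model would be trained to detect them.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    source: str
    methodology: str
    supports: bool  # does this source support the claim?


def answer_or_abstain(claim, findings):
    """Return a verdict only when the sources agree; otherwise describe
    the uncertainty instead of guessing."""
    if not findings:
        return f"I don't know: no sources address '{claim}'."
    pro = [f for f in findings if f.supports]
    con = [f for f in findings if not f.supports]
    if pro and con:
        return (
            f"{pro[0].source}, using {pro[0].methodology}, supports this "
            f"conclusion. However, it is directly contradicted by "
            f"{con[0].source}, which uses {con[0].methodology}. I cannot "
            "resolve this conflict without additional information."
        )
    verdict = "supported" if pro else "contradicted"
    return f"'{claim}' is {verdict} by {len(findings)} source(s)."


print(answer_or_abstain("Drug X reduces mortality", [
    Finding("Source A", "methodology X", True),
    Finding("Source B", "methodology Y", False),
]))
```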

Part III: The State of the Art - Assembling the Pieces

The components for this vision are not hypothetical; they are being actively built across the Web3 ecosystem.

Bittensor: The Economic Engine for a Coordination Layer

Bittensor has created a permissionless, global market for machine intelligence. However, its objective is fundamentally different from the Veritas ideal. Bittensor optimizes for a market-determined, subjective measure of "value," not objective, verifiable truth. Its consensus is a "plutocratic truth model" determined by capital weight. An actor with sufficient stake can influence what the network deems "valuable."
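The concern about capital weight can be illustrated with a deliberately simplified stake-weighted average. This is not Bittensor's actual consensus mechanism, which is more involved; the validator names, stakes, and scores below are made up purely to show how a single large stakeholder can move a weighted result.

```python
def stake_weighted_score(scores, stakes):
    """Average validator scores, weighting each by that validator's stake."""
    total = sum(stakes[v] for v in scores)
    return sum(scores[v] * stakes[v] for v in scores) / total


# Three small validators rate an output poorly; one large one rates it highly.
scores = {"v1": 0.2, "v2": 0.2, "v3": 0.2, "whale": 1.0}
stakes = {"v1": 10, "v2": 10, "v3": 10, "whale": 70}

weighted = stake_weighted_score(scores, stakes)
unweighted = sum(scores.values()) / len(scores)
print(round(weighted, 2), round(unweighted, 2))
```

In this toy setup the stake-weighted score lands far closer to the large validator's opinion than the simple mean does, which is the sense in which "value" here is determined by capital rather than by verification.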

Despite this, Bittensor's architecture is not a competitor but a powerful enabling technology. It provides the perfect economic and coordination layer to host specialized, competitive markets. One could envision a "Veritas Subnet" where the incentive is not to produce plausible text, but to produce valid ZK proofs. In this model, Bittensor becomes the economic engine that organizes and incentivizes a network of provers, validators, and experts.

ZKML & Nexus ZKVM: The Engine for Provable Computation

Zero-Knowledge Machine Learning (ZKML) is the core technology that would make vCoT possible. Projects like Modulus Labs and Lagrange are pushing the boundaries of what can be proven, and a general-purpose engine like Nexus ZKVM is designed to prove the correct execution of any computation.

However, it's crucial to understand what a ZK proof does and doesn't do. It can prove that a computation was executed correctly. It cannot prove that the model's output is semantically true or that the model itself is sound. It will happily prove that a flawed model executed its flawed logic perfectly. This is the "garbage in, provably-computed garbage out" problem. A ZK proof is only one link in a longer chain of trust, which highlights the absolute need for provenance.
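The gap between "executed correctly" and "true" can be shown with a stand-in for a proof system. The sketch below is not zero-knowledge at all: it verifies by plain re-execution against a hash commitment, where a real ZK proof would be succinct and could hide the weights. All names (`flawed_model`, `prove`, `verify`) are hypothetical. The point is only that verification passes even though the model's answer is wrong.

```python
import hashlib
import json


def commit(obj):
    """Hash commitment to a JSON-serializable object."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def flawed_model(weights, x):
    # A deliberately wrong "model": it labels every integer as even.
    return "even"


def prove(model, weights, x):
    """Produce a receipt binding the committed model, input, and output."""
    return {
        "model_commitment": commit(weights),
        "input": x,
        "output": model(weights, x),
    }


def verify(model, weights, receipt):
    """Stand-in verifier: re-run the committed model and compare outputs."""
    return (
        receipt["model_commitment"] == commit(weights)
        and model(weights, receipt["input"]) == receipt["output"]
    )


weights = {"bias": 1}
receipt = prove(flawed_model, weights, 7)
print(verify(flawed_model, weights, receipt), receipt["output"])
```

The receipt verifies, yet 7 is not even. Verification establishes that this specific model produced this output for this input, and nothing more.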

Sentient & The Verifiable Knowledge Supply Chain

This is where a technology like Sentient's "Fingerprinting" becomes critical. Its focus is on provenance: "Who made this AI?" By embedding a unique cryptographic watermark into a model, fingerprinting makes it possible to prove that model's origin.

This provides a potential missing link. For a system to be truly trustworthy, a "Verifiable Knowledge Supply Chain" is needed. This is an end-to-end, auditable trail from data source to final output. Every step must be verifiable: that the "Knowledge Seeds" were valid, that the training process used them to produce a specific model, and that this model—identified by its Sentient-like fingerprint—was the one used for inference. By combining this fingerprint with a Nexus-like ZK proof, a much stronger end-to-end guarantee could be created.
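One way to picture the supply chain is as a series of identifiers, each derived from the one before it. The sketch below is illustrative only: a SHA-256 hash of the weights stands in for a model fingerprint (it is not Sentient's technique), and `make_trail` and `audit` are hypothetical names. It binds the claimed seeds, training configuration, model, and inference together so that swapping any one of them is detectable.

```python
import hashlib
import json


def h(obj):
    """Content hash of a JSON-serializable object."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def make_trail(seeds, training_config, model_weights, query, output):
    """Derive each stage's identifier from the previous stage's identifier."""
    seeds_id = h(seeds)
    training_id = h({"seeds": seeds_id, "config": training_config})
    # Stand-in for a fingerprint: a hash over the weights and training record.
    model_id = h({"training": training_id, "weights": model_weights})
    inference_id = h({"model": model_id, "query": query, "output": output})
    return {
        "seeds": seeds_id,
        "training": training_id,
        "model": model_id,
        "inference": inference_id,
    }


def audit(trail, seeds, training_config, model_weights, query, output):
    """Recompute the trail from the claimed artifacts and compare."""
    return trail == make_trail(seeds, training_config, model_weights, query, output)


seeds = ["axiom-1", "axiom-2"]
config = {"epochs": 3}
model_weights = [0.1, 0.2]
trail = make_trail(seeds, config, model_weights, "2 + 2?", "4")
print(audit(trail, seeds, config, model_weights, "2 + 2?", "4"))
```

A trail like this only shows that the artifacts are consistent with what was registered. Showing that training actually consumed those seeds, or that inference actually ran that model, is the part that would need a proof of execution.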

Part IV: A Blueprint for Verifiable AI

The preceding analysis reveals that no single project holds the answer, but all the necessary components are on the chessboard. The strategic challenge, therefore, is one of architecture. A viable blueprint emerges not from inventing new primitives, but from the masterful synthesis of these existing technologies. Here is how such a protocol could be architected:

Layer 1: The Coordination & Incentive Layer (The Bittensor Model)

The foundation would be a decentralized economic engine hosting specialized markets:

The Prover Market: A competitive marketplace where "miners" run hardware to generate ZK proofs for inference requests. The incentive mechanism would reward the fastest and most efficient generation of valid proofs, commoditizing verification and driving down its cost.

The Expert Critique Market: An implementation of RLEC, creating a scalable global market for intellectual rigor where credentialed experts are rewarded with tokens for submitting structured critiques of AI reasoning chains.

The Provenance Registry: An immutable ledger using Sentient-like fingerprinting to register certified "Knowledge Seeds," the training processes that use them, and the resulting AI models. This creates an auditable trail for any model in the ecosystem.
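As a rough illustration of the Prover Market's incentive, the sketch below splits a reward among miners whose proofs verified, in proportion to inverse latency. The payout rule is an assumption made for this example (the text only says faster, more efficient valid proofs should earn more), and `Submission` and `settle` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    miner: str
    latency_ms: float
    valid: bool  # result of running the (hypothetical) proof verifier


def settle(submissions, reward):
    """Pay only valid proofs, weighting each by 1 / latency."""
    valid = [s for s in submissions if s.valid]
    if not valid:
        return {}
    weights = {s.miner: 1.0 / s.latency_ms for s in valid}
    total = sum(weights.values())
    return {miner: reward * w / total for miner, w in weights.items()}


payouts = settle(
    [
        Submission("a", 100.0, True),
        Submission("b", 400.0, True),
        Submission("c", 50.0, False),  # fastest, but the proof did not verify
    ],
    reward=10.0,
)
print(payouts)
```

The fastest submission earns nothing because its proof is invalid, which is the property that keeps the market competing on verified work rather than on speed alone.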

Layer 2: The Verification & Computation Layer (The Nexus/ZKML Standard)

This layer would not be a network but a set of open standards. The protocol would mandate a single, standardized, and open-source ZKVM to ensure all proofs across the ecosystem are generated according to the same auditable rules, preventing fragmentation and ensuring universal verifiability. This enforces a uniform standard of verification for the entire network; as noted above, a proof attests to correct execution, not to the truth of the output.

Layer 3: The Application & Privacy Layer (The Zama Model)

This would be the user-facing layer. For sensitive use cases like medicine or finance, queries could be encrypted on-device using Fully Homomorphic Encryption (FHE). This allows computation on encrypted data, providing end-to-end privacy guaranteed by pure cryptography—a model philosophically aligned with the trustless ethos (as opposed to hardware-based TEEs that require trusting a vendor). A user could get a verifiable result from an AI without ever revealing their private data to the model or network.
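"Computation on encrypted data" can be demonstrated with a toy version of the Paillier cryptosystem. Two loud caveats: Paillier is only additively homomorphic, so this is not FHE and not Zama's library, and the primes below are tiny and completely insecure. The function names are chosen for this example. The sketch shows a server adding two numbers it cannot read.

```python
import math
import random

# Toy key material: tiny primes, insecure, for illustration only.
P, Q = 293, 433
N = P * Q
N2 = N * N
G = N + 1
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)  # modular inverse; valid because G = N + 1


def encrypt(m):
    """Encrypt an integer 0 <= m < N under the public key (N, G)."""
    while True:
        r = random.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N2) * pow(r, N, N2)) % N2


def add_encrypted(c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts (mod N)."""
    return (c1 * c2) % N2


def decrypt(c):
    """Decrypt with the private values LAM and MU."""
    u = pow(c, LAM, N2)
    return ((u - 1) // N * MU) % N


# The client encrypts; the server adds without seeing 20 or 22.
encrypted_sum = add_encrypted(encrypt(20), encrypt(22))
print(decrypt(encrypted_sum))
```

Fully homomorphic schemes extend this idea to both addition and multiplication, which is what makes arbitrary computation, including model inference, possible in principle.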

Part V: The Inevitable Convergence

Why This is Inevitable: The Economics of Verification

This convergence isn't wishful thinking. It's a response to mounting regulatory and market pressure. The primary obstacle has been the immense computational overhead of ZK proofs. This challenge is being attacked from two directions.

On the macro level, massive, multi-trillion-dollar investments from figures like Sam Altman and Elon Musk, and entities like the US and UAE governments (e.g., Stargate), are dedicated to solving the global compute shortage. But while they build the centralized highways for raw processing power, they don't solve the specific microeconomics of verification. On the micro level, this is where the decentralized architecture of Layer 1 becomes essential. By creating competitive, specialized Prover Markets, Web3 provides the mechanism to harness global compute efficiently, driving down the marginal cost and latency of each cryptographic proof and making verification economically feasible at scale.

The Monday Morning Test

If you're a CEO or board member, ask your AI team three simple questions this week:

Can you cryptographically prove this AI output is correct?

Can you trace this decision back to its source data and logic?

Can you guarantee this model won't hallucinate in a critical production environment?

When they inevitably answer "no" to all three, the real question is no longer for your tech team but for your board: "What is our acceptable level of unverified computational risk?" That reframes the problem from a technical limitation to a fundamental matter of corporate governance, and it shows why verifiable AI isn't optional: it's the only way forward.

Conclusion: A Call for Synthesis

I've spent a decade building Web3 infrastructure, often wondering what killer use case would justify all this complexity. Verifiable AI is potentially that use case.

The age of "trust us, our AI is safe" is ending. The age of "here's the cryptographic proof" is about to begin. The window for building this future is the next 18-24 months. After that, regulatory moats and network effects will lock in the winners. The challenge is no longer about inventing a single new technology, but about the masterful synthesis of the powerful pieces already in motion. The teams that architect this convergence will not just build the next $10 trillion industry; they will define the next era of our relationship with intelligence itself.

This analysis is a starting point. The convergence I've outlined raises foundational questions for all of us. Which other philosophical traditions are critical for a truly global AI? What are the second-order effects of verifiable computation on market structures? The conversation begins now, and I am especially interested in perspectives from outside the Western tech bubble.